We’re halfway through our solopreneunicorn sprint. Yesterday, we built an engine for creating ideas for “Content bundles,” which, if you didn’t catch the article, are sets of thematic, cross-platform content ideas (example: creating a blog post and a set of YouTube shorts from the same general topic).

Though it’s fair to expect any entrepreneur to be an expert in the product they are selling, it’s equally fair to assume that a single person or small team will, at some point, need to engage with customers or markets they don’t know well. Today’s goal is to add a feature that helps us get up to speed quickly when faced with an unfamiliar topic.

Researching new markets

Take a second to think about this question: when faced with an unfamiliar prospect or target market, what is the first thing that you would do to get up to speed?

For me, it would be heading over to Google to see what I can find (shoutout to my wife, who still has a library card and would love the excuse to hit the stacks). I’d do some keyword searches, find some helpful articles or YouTube videos to consume, and then repeat the cycle. If I were lucky enough to have a friend with expertise in the area, I’d probably speak to them as well.

The main issue with this approach is that googling around for information becomes exhausting very quickly. Trying to find good signals among the noise of SEO-optimized (I’m aware of the redundancy) fluff is a challenge, and there’s something about all of the different colors, advertisements and brands that one gets confronted with when conducting search engine-based research that leads to quick burnout.

ChatGPT can serve as a nice alternative to Google for research, but given its hallucination issue, it’s hard to fully trust its results without cited sources.

Perplexity is an LLM-enhanced search tool that handles this very well: its answers come with links back to the sources they draw on. We want to implement something similar to Perplexity in our platform, so that we can use the search results to assist with every other aspect of the system.

Note for my readers: since the original recording of this video, OpenAI added what looks like a competitor to Perplexity to ChatGPT. Read more here.

The need for quality research…the simplicity of tools like ChatGPT…links to source content…is there a way to combine all three of these?

LLM agent systems

Of course there is. Here’s a fantastic explanation of AI agent-based workflows from Andrew Ng, which I’ll borrow from for a short explanation of the concept: the difference between a non-agentic LLM workflow and an agentic LLM workflow is similar to the difference between 1) asking someone to type an essay from start to finish without using the backspace and 2) allowing someone to pause, think and make revisions while writing. ChatGPT (pre-strawberry) is the perfect example of a non-agentic workflow: it produces a response from start to finish without any pauses.

Agent-based systems don’t only focus on self-reflection and reasoning; they also often focus on allowing LLMs to use tools. Tools can be things like a method for accessing the internet, the ability to write database queries, et cetera. The way in which these tools are provided to agents is too technical for the scope of this post, but leave a comment if you’d like to learn more.

When combining the ability to self-reflect and the ability to use tools, we can do things like asking an agent system to research a topic on our behalf.
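For the curious, here is a toy sketch of that combination in Python. It is not how any real agent framework works under the hood: `fake_search`, `write_draft`, `critique` and `run_agent` are all hypothetical stand-ins that I made up for illustration, and the "LLM" steps are plain functions returning canned placeholder text. The point is only the shape of the loop: draft, reflect, call a tool if something is missing, then revise.

```python
from typing import Callable, Dict, List


def fake_search(query: str) -> List[str]:
    """Hypothetical tool: stands in for real web search and returns placeholder notes."""
    corpus = {
        "retail supply chain": [
            "Placeholder note A.",
            "Placeholder note B.",
        ],
    }
    return [
        note
        for key, notes in corpus.items()
        if key in query.lower()
        for note in notes
    ]


# The tools the agent is allowed to call, looked up by name.
TOOLS: Dict[str, Callable[[str], List[str]]] = {"search": fake_search}


def write_draft(topic: str, notes: List[str]) -> str:
    """Stand-in for an LLM call that writes a draft from the notes gathered so far."""
    body = " ".join(notes) if notes else "(no supporting notes yet)"
    return f"{topic}: {body}"


def critique(draft: str) -> str:
    """Stand-in for self-reflection: returns the name of a tool to call, or 'done'."""
    return "search" if "(no supporting notes yet)" in draft else "done"


def run_agent(topic: str, max_steps: int = 3) -> str:
    """Draft, reflect, use a tool if needed, and revise, for at most max_steps rounds."""
    notes: List[str] = []
    draft = write_draft(topic, notes)
    for _ in range(max_steps):
        action = critique(draft)
        if action == "done":
            break
        notes.extend(TOOLS[action](topic))
        draft = write_draft(topic, notes)
    return draft


print(run_agent("Retail supply chain efficiency"))
```

A non-agentic version would be a single call to `write_draft` with no notes at all. The loop is what lets the system notice a gap and go fetch something to fill it.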

STORM

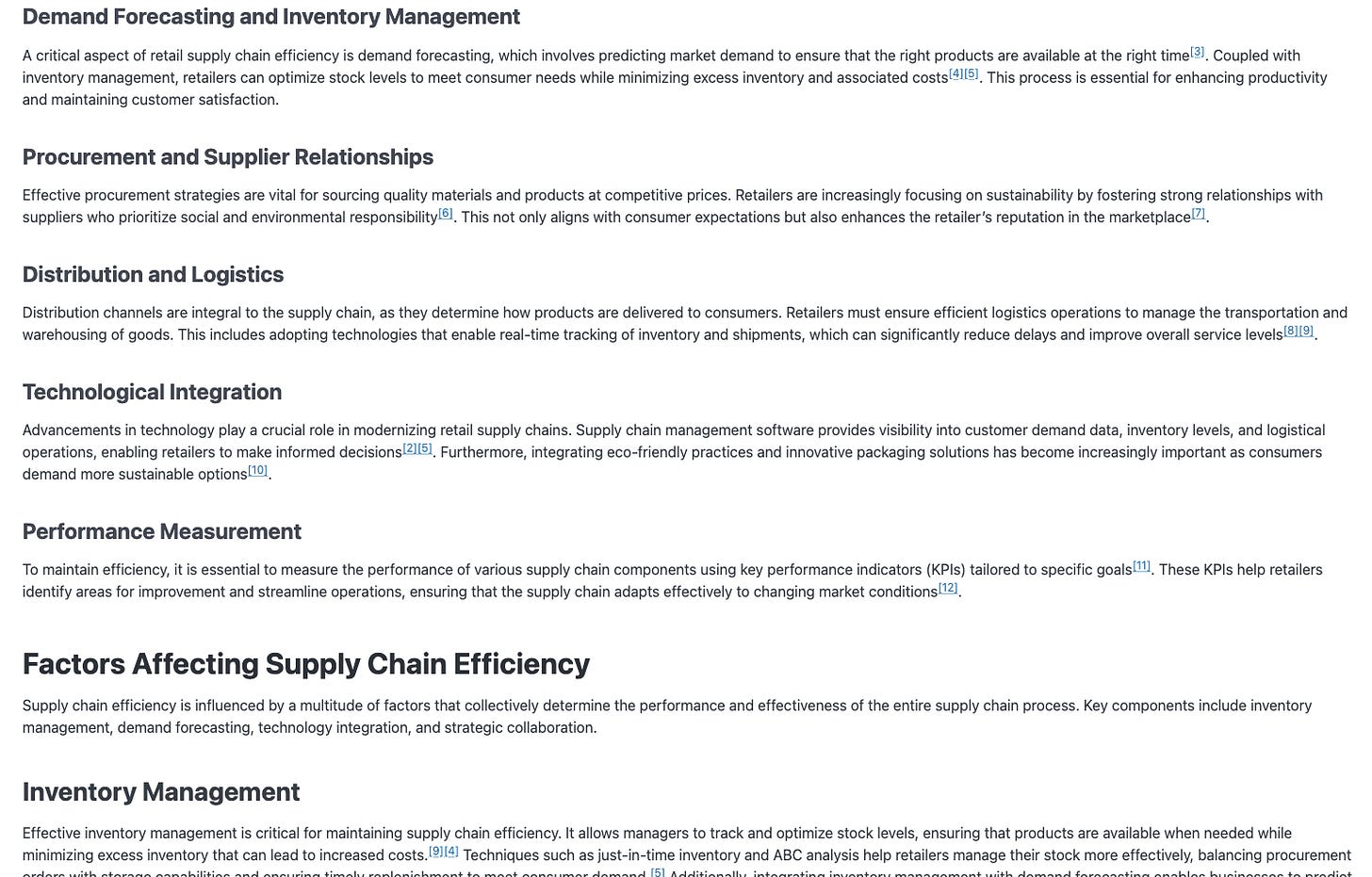

The team at the Stanford Open Virtual Assistant Lab has created an amazing agent-based research tool called STORM, which is available to try online and can also be installed as a Python package. The idea behind the tool is pretty simple: take a research topic, do an extensive internet search about the topic, and then use AI to generate a Wikipedia-style article with links back to the original sources of information.
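To make the "topic in, cited article out" idea concrete, here is a minimal Python sketch of that flow. To be clear, this is not STORM's code or its API: `Source`, `search_sources` and `write_article` are hypothetical names of my own, the search step returns canned placeholder results with example.com URLs, and the "writing" step just stitches snippets together where the real tool would use an LLM.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Source:
    url: str
    snippet: str


def search_sources(topic: str) -> List[Source]:
    """Hypothetical stand-in for the internet search step; returns canned placeholders."""
    return [
        Source("https://example.com/source-a", f"Placeholder finding A about {topic}."),
        Source("https://example.com/source-b", f"Placeholder finding B about {topic}."),
    ]


def write_article(topic: str, sources: List[Source]) -> str:
    """Build a short article in which every statement carries a numbered citation."""
    lines = [topic, ""]
    for i, src in enumerate(sources, start=1):
        lines.append(f"{src.snippet} [{i}]")
    lines += ["", "References"]
    for i, src in enumerate(sources, start=1):
        lines.append(f"[{i}] {src.url}")
    return "\n".join(lines)


topic = "Retail supply chain efficiency"
article = write_article(topic, search_sources(topic))
print(article)
```

The detail worth noticing is that each sentence keeps a pointer to where it came from, which is what makes the final article checkable rather than something you have to take on faith.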

Remember, today’s goal is to use AI to help us quickly get up to speed on topics that we want to produce content about. Implementing STORM within our application seems like a perfect way to reach our goal.

Here’s a look at the inputs and outputs after implementing this in our app:

Input: “Retail supply chain efficiency” (one of yesterday’s topics)

Output: Custom article about our input

I don’t want to include too many screenshots in here, but the article keeps going. As you can see, sources are referenced throughout so that we can dive deeper on any topic and verify the information immediately.

To me, this is nothing short of amazing, and will certainly speed up my research process. I hope that readers of this article will find this as compelling as I do.

What a time to be alive. See you tomorrow!