Day Eight - A Learning Machine

A positively reinforcing cycle

I can’t believe it’s already day eight of our solopreneunicorn journey. At the time of writing, this series has close to 1000 views! I know those numbers are peanuts compared to the big publications out there, but I’m really happy that this content is resonating with you.

In terms of tech, we’ve done a lot of work on our application at this point. We started with a simple X idea engine, expanded to other platforms, added ways to research new markets, and designed specific email campaigns, all while maintaining data storage features that help us cut down on repetitive work and measure our performance.

That’s a lot of work! Today, we get to reap the rewards.

Let’s indulge!

Memory, revisited

Something that we’ve discussed already is that LLMs do not remember anything from one invocation to the next. What might feel like a flowing conversation between you and ChatGPT is simply an expertly crafted illusion. Setting aside the text streaming interface (which delightfully mimics the way human language flows in conversation), the conversational feeling is created like this: each time you ask ChatGPT a new question, all of your previous questions and ChatGPT’s answers to them are prepended to your current question.

For example (disclaimer: I don’t work for OpenAI, so take this with a grain of salt; it’s also simplified and leaves things out for the sake of clarity):

===== FIRST QUESTION: WHAT THE MODEL SEES =====

Context: [empty]

You: “Can you help me come up with a dinner idea?”

===== FIRST QUESTION: HOW THE MODEL RESPONDS =====

ChatGPT: “Certainly! How about you try out this fun salad…”

===========================================================

Now, watch how the context of the first question and response is included in the second interaction with ChatGPT.

===== SECOND QUESTION: WHAT THE MODEL SEES =====

Previous: “Can you help me come up with a dinner idea?” => “Certainly! How about you try out this fun salad…”

You: “Please more carbs!”

===== SECOND QUESTION: HOW THE MODEL RESPONDS =====

ChatGPT: “Salads are typically low on carbs, so let’s come up with something different. How about you try a…”

==============================================================

This is incredibly interesting and I’m not trying to knock it! I think that something like “past experiences that we recall in the process of attending to the present moment” is a great description of human memory, so this context-based prompting is totally logical. I’m not suggesting that there is anything unimpressive about how these things work - it’s just important to keep these mechanisms in mind when building or working with language model-based systems.
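To make the prepending mechanism concrete, here is a minimal sketch in Python. It is not how ChatGPT is actually implemented: `build_prompt`, `ask`, and `fake_model` are hypothetical names, and `fake_model` is a stand-in for a real LLM call that simply reports how many lines of text it was shown.

```python
from typing import Callable, List, Tuple

# A "turn" is one (question, answer) pair from earlier in the conversation.
Turn = Tuple[str, str]


def build_prompt(history: List[Turn], question: str) -> str:
    """Prepend every earlier question/answer pair to the new question."""
    lines = [f'Previous: "{q}" => "{a}"' for q, a in history]
    lines.append(f'You: "{question}"')
    return "\n".join(lines)


def ask(model: Callable[[str], str], history: List[Turn], question: str) -> str:
    """Send the full prompt to the model, then record the exchange."""
    answer = model(build_prompt(history, question))
    history.append((question, answer))
    return answer


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call: it only reports how many
    # lines of text it was shown, which makes the growing prompt visible.
    return f"I was shown {len(prompt.splitlines())} line(s)"


history: List[Turn] = []
first = ask(fake_model, history, "Can you help me come up with a dinner idea?")
second = ask(fake_model, history, "Please more carbs!")
```

The model function itself holds no state. All of the "memory" lives in the `history` list that the calling code maintains and re-sends on every turn.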

Our goal when working with software that is designed to mimic humans is to increase the likelihood that what is produced mimics the truth (defined as proximity to the ideal, for outputs don’t always have an objective truth) as closely as possible. Because we’re using off-the-shelf models for this series, the only variable that we can really play with to move us closer to the ideal output is what gets passed into the prompt (the “context window”).

This is how we create an LLM-based system that learns: we constantly update the context window with relevant historical data.

Retrieval-Augmented Generation

RAG is one of the most commonly used acronyms in the LLM space these days. In short, RAG refers to the process of retrieving data that is relevant to the task you are asking an LLM to perform, so that this data can be included in its context window.
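As a rough illustration of the retrieve-then-include idea, here is a small Python sketch. It assumes a deliberately naive relevance measure (counting shared words); real systems usually rely on embeddings or a database query instead. The function names and the sample records are made up for this example.

```python
from typing import List


def score(query: str, document: str) -> int:
    """Count how many distinct query words also appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    return len(query_words & doc_words)


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents with the highest overlap, skipping zero scores."""
    ranked = sorted(documents, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:k] if score(query, d) > 0]


def augment(query: str, documents: List[str]) -> str:
    """Place the retrieved records in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents))
    return f"Background data:\n{context}\n\nQuestion: {query}"


# Made-up records, for illustration only.
records = [
    "email campaign for dog owners had a high open rate",
    "post about meal prep got few likes",
    "email campaign for cat owners had a low open rate",
]
prompt = augment("which email campaign had the best open rate", records)
```

Only the two email records end up in `prompt`; the unrelated meal prep record is left out, which keeps the context window focused on the task at hand.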

Our goal is to create a system that learns over time, and we’re going to do that by implementing an agent-based RAG system with the performance measurement data that we started to record yesterday.

The process is actually much simpler than it may sound. All we need to do is give our LLM access to a tool (the ability to query a database via a function). If it decides that using the tool is necessary (i.e. if it thinks it should grab data to answer our question), we feed the results of the query into a second LLM prompt, which is then used to answer our question.

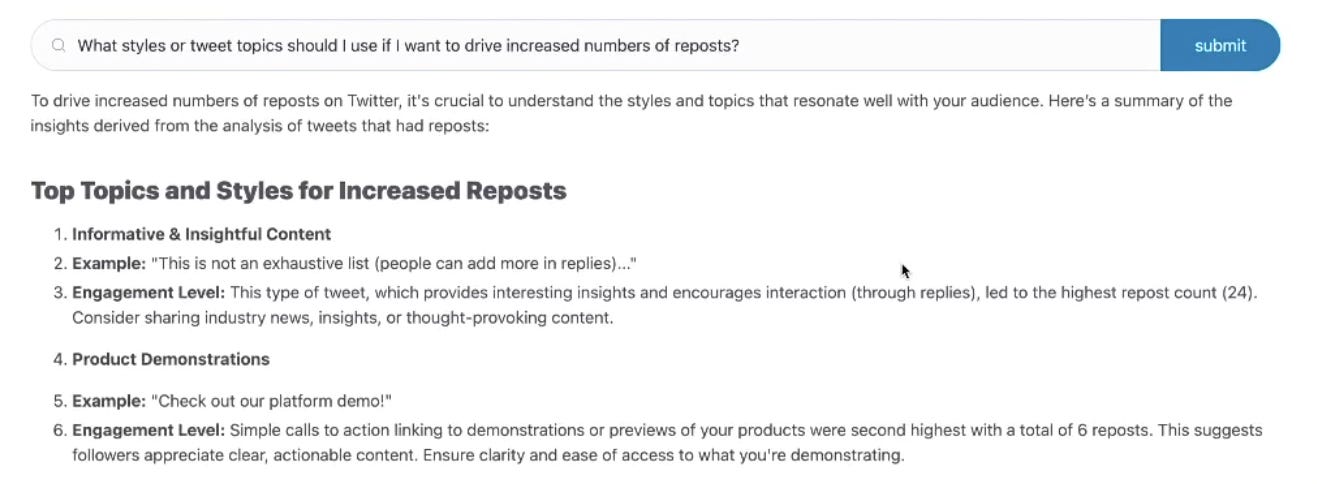
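The two-step flow described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the code from this series: the `posts` table and its columns are a hypothetical schema, and `fake_decide` and `fake_respond` are stand-ins for the two LLM calls (the tool decision and the final answer).

```python
import sqlite3
from typing import Callable, List, Optional, Tuple

Row = Tuple[str, int]


def query_performance(conn: sqlite3.Connection, platform: str) -> List[Row]:
    """The tool: fetch recorded posts for one platform, best first."""
    cursor = conn.execute(
        "SELECT content, likes FROM posts WHERE platform = ? ORDER BY likes DESC",
        (platform,),
    )
    return cursor.fetchall()


def answer(
    question: str,
    conn: sqlite3.Connection,
    decide: Callable[[str], Optional[str]],
    respond: Callable[[str], str],
) -> str:
    """Step 1: the model decides on the tool. Step 2: answer using the results."""
    platform = decide(question)  # None means "no lookup needed"
    if platform is None:
        return respond(question)
    rows = query_performance(conn, platform)
    data = "\n".join(f"{content} ({likes} likes)" for content, likes in rows)
    return respond(f"Data:\n{data}\n\nQuestion: {question}")


def fake_decide(question: str) -> Optional[str]:
    # Hypothetical stand-in for the first LLM call (the tool decision).
    return "x" if "post" in question.lower() else None


def fake_respond(prompt: str) -> str:
    # Hypothetical stand-in for the second LLM call: echoes the top data row.
    lines = prompt.splitlines()
    return lines[1] if lines[0] == "Data:" else "No data needed"


# Hypothetical schema and made-up rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (platform TEXT, content TEXT, likes INTEGER)")
conn.executemany(
    "INSERT INTO posts VALUES (?, ?, ?)",
    [("x", "thread on pricing", 40), ("x", "meme about bugs", 12),
     ("linkedin", "launch note", 7)],
)
result = answer("Which post did best?", conn, fake_decide, fake_respond)
```

In this sketch the model only chooses a parameter for a fixed, parameterized query rather than writing SQL itself. That is one possible design choice; it limits what the model can do to the database.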

The result is a system that can learn from the data that we’ve recorded.

Now, obviously, the example shown here is in Q&A format, because I wanted to tie it back to the ChatGPT description that we talked through above. You could just as easily use the updated background data in the X idea engine, the email campaign generator, or really any other aspect of the system.

The bottom line: we now have a system that can learn from the past and grow with our business.

See you tomorrow. Between now and then, let your imagination run wild!